The social contract sauce. Contains: Europol, big data, spyware, employment contracts (May contain traces of privacy)

Credit: Europol

On the 19th and 20th of October I was invited to participate in the Europol Cybercrime Conference, held at Europol's headquarters in Den Haag, Netherlands.

This year’s theme was “The evolution of policing”, and it brought together law enforcement (“LE”) agents, private and public cybersecurity experts, Data Protection Officers, researchers and professors from all around the world to answer the question of whether there is a need for a social contract in cyberspace.

Although the topic of cyber policing may seem somewhat distant from the scope of LeADS, the two are surprisingly connected. Many links exist between aspects of European cybersecurity and law enforcement and key issues in the LeADS project, such as the regulation of cyberspace amid the tension between individual freedom and public interests, the concept of trust and its different facets in law enforcement in the metaverse, fair vs effective data governance, the use of big data vs machine learning, and the opportunities and challenges of portability, interoperability and re-usability of data for policing purposes.

Introducing one of the first debates, the European Commissioner for Home Affairs, Ms. Ylva Johansson (one of only 8 female speakers out of 39 at the conference), opened with the statement that security is the social contract. It is understandable why Rousseau’s idea of the social contract might be intertwined with that of entrusting security to the power of the state, with its checks and balances, thereby taking the pursuit of justice out of the hands of people driven by their individualistic amour propre. However, that reading misses a part that I personally believe is the most important, and which was discounted too many times throughout the conference, sometimes accidentally, sometimes wilfully: in Rousseau’s vision of the social contract, each person should enjoy the protection of the state “whilst remaining as free as they were in the state of nature.”

Security is a necessary pillar for the existence and evolution of democratic societies, but it is only a starting point, one of the foundations, not the social contract itself. It is a precondition for the social contract, but it is far from exhausting its functions. Citizens of a democratic society can and should expect much more from a national state than the mere prevention and investigation of crimes, offline and online. Examples include upholding policies that improve social welfare, civil rights, healthcare, and protection from discrimination or anti-competitive behaviors. Understanding the social contract in its myopic security sense would legitimize Orwellian states that secure people through mass surveillance and social credit scoring. Privacy, in this context, is the first line of protection for Rousseau’s individual freedom, together with personal data protection, which functions as a proxy for the protection of every other fundamental right, freedom and legitimate interest enshrined in the European “constitutions”. The essence of the social contract lies in anticipating the moment for law enforcement action before fundamental rights are violated, while keeping all fundamental rights in the balance, security being only one among many.

This underlying leitmotiv of the conference resurfaced on many occasions. Representatives of law enforcement repeatedly lamented that bureaucracy concerning the rule of law and privacy often ends up dulling investigative tools, for example by limiting the collection of personal data to specific legal bases and restricting how long it may be retained and analysed. However, what these laws limit is only the indiscriminate, trawling collection of non-contextual data for unspecified uses and for unlimited time, in case it might come in handy in the future. It also seems clear that LE is still holding on to the promise of big data analytics, with its tenet of always collecting and retaining everything possible, while discounting privacy-friendlier alternatives powered by machine learning algorithms that do not need such amounts of data, but rather smaller, sanitized, high-quality datasets to train and test models. A hybrid system that combines machine learning models with targeted data analysis would dramatically reduce the need for voluminous, noisy, cumbersome, leakable data collection and storage, while respecting the privacy of uninvolved citizens: the first would help in the hunt for suspicious activities online, while the second would circumscribe the area of investigation to suspected individuals only, thus upholding proportionality.

LE’s request for more access to data depends on people’s trust in governmental institutions, and such trust is hard to establish but easily broken. In this regard, one investigative journalist raised the thorny issue of the use of the Pegasus spyware by European LE agencies. The reference was to the spyware found installed on phones belonging not only to criminal suspects, but also to journalists, European prime ministers, members of parliament, and civil society activists; in total, it collected 4 petabytes of data on innocent people before being exposed by Citizen Lab, a Canadian research centre. Mutatis mutandis, but through the same critical lens, we should look at the current EDPS legal action against Europol. In the case pending before the ECJ, the EDPS is challenging the legitimacy of the new Articles 74a and 74b of the Europol Regulation, which retroactively legalize Europol’s processing of large volumes of individuals’ personal data with no established link to criminal activity. It is no wonder that such events erode people’s trust in LE. Transparency in operations and decision making could have played a positive role in establishing trust between private citizens and LE, yet on these occasions its absence backfired badly, perhaps irremediably.

The problem LE faces is not only the need for more data and easier access to it, but also that data be formatted, visualized and shared in a way that is actionable. Data actionability, in the context of coordination and crime prevention, requires both understandability by operators (starting with human-readability) and portability to receiving systems (starting with machine-readability). Unfortunately, on the side of operators, many high-level officers lamented the extreme lack of human resources with data science skills, which is in stark contrast with their commitment to big data and their concomitant jettisoning or not hiring of digitally competent personnel from civil society or the private sector: most open vacancies at Europol are restricted to seconded officials. On the side of portability and interoperability of data and systems, there is a lack of standardization, which renders communication and coordination among national police forces cumbersome and inefficient, much like in the European market for data.

All in all, the conference left a bitter taste in my mouth. One of the biggest lessons that years of research into the regulatory aspects of technology have taught me is that technology regulation is complex. To make sense of it, analysts need a granular, expert and sensible look at the specific context in which technologies are deployed, but also an understanding of their effects on the macroscopic picture of international geopolitical, economic and social systems. Cybercrime prevention and repression is one such complex system, whose analysis and management need multidisciplinarity, out-of-the-box thinking, lateral and longitudinal vision, innovative skills and state-of-the-art tools. But most importantly, this evolutionary process of policing will need to be built on the essence of Rousseau’s social contract, the credo that security is a corollary to freedom, not the other way around, and it must serve its purposes.

Unfortunately, at least from an organizational standpoint, it seemed that Europol is following a different, if not altogether opposite, path to reach its security goals. The call for more data retention, the discounting of machine learning, the lack of digital expertise and the admitted difficulty in acquiring it, and the hunt for human and technical resources only from inside LE all seem less like the evolution of a trustworthy, pioneering, EU values-driven agency and more like a gradual transformation into an old-school police department.

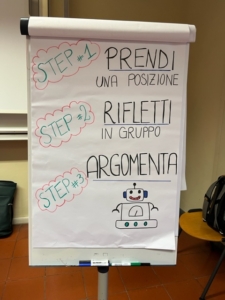

The procedure was designed to reach goal 2 of the game, critically evaluating facts and putting forward the most convincing arguments, and goal 3, learning how democratic debates develop by mimicking the actual rules of parliamentary democracies (albeit in a slightly simplified version).


The European Data Protection Supervisor gave a presentation and mentioned an advisory document published by the EDPS, titled “Guidelines for assessing the proportionality of measures that limit the fundamental rights to privacy and the protection of personal data”. The first day of the conference continued with presentations by other valuable speakers.


The Regulation was extended for one more year, even though its problematic features make the topic worth discussing further.

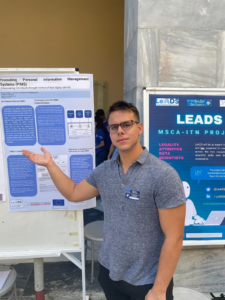

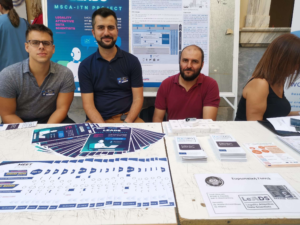

This is an annual event in which all universities and research centers of the Attica region gather to present the ongoing activities they are involved in. We were present at the “European Corner”, where the MSCA fellows presented their posters and the ESRs answered questions not only from the audience but also from other researchers and from students of both universities and schools.
