Op-Ed: “Business-to-government data sharing on the Data Act: between a rock and a hard place” by Barbara Lazarotto

This Op-Ed was originally published on the EU Law Live Blog and can be accessed here.

The Data Act proposal, published in February 2022, is the latest Regulation proposed under the European Data Strategy. Its main objective is to remove obstacles to the circulation of data and to create value from it through business-to-consumer, business-to-business and business-to-government data sharing. The Proposal has attracted attention for its innovative approach, which aims to address complex concerns about the data economy that differ depending on the area of regulation. The Act addresses market dominance in the fields of IoT products and cloud and edge computing, and aims to advance the European data economy through data reuse, including by the public sector. Yet the trajectory of the Data Act proposal has been a bumpy ride, with a lot of back and forth between pushing for more data sharing and further restricting data access, mostly driven by stakeholder positions on business-to-government data sharing. In this Op-Ed, I explore the road the Proposal has travelled so far, how the modifications made throughout the legislative process still support the industry position, and what this means for the future of business-to-government data sharing in Europe.

A wrestle between public sector access and industry interests

Before reaching the Council, the Data Act Proposal focused mostly on data access by consumers and on the further sharing of data to enhance market competition. Chapter V, fully dedicated to business-to-government data sharing, was heavily influenced by the management of public health during the COVID-19 pandemic, when access to privately held data by the public sector was considered essential. However, private data is not only useful in exceptional circumstances: it can also be essential in supporting the public sector's policymaking, a scenario that the Data Act proposal did not fully address.

Yet, when the Czech Republic held the rotating presidency of the Council of the EU, from 1 July to the end of 2022, it took a pro-public sector approach, broadening the scope of business-to-government data sharing beyond the original proposal by extending the circumstances in which data could be accessed for the performance of public tasks. Prague pushed for stronger mandatory data-sharing rules, although the text significantly reduced the scope of business-to-government data sharing to the European Commission and EU agencies only, whereas it had previously applied to all EU institutions. Empowering clauses were strengthened, allowing the public sector to challenge compensation requests from data holders. One of the main additions was a further specification of the tasks in the public interest provided by law that might justify a request for privately held data, such as local transport, city planning and infrastructure services. This opened up a range of possibilities for using private data for public purposes, such as the development of smart cities.

After the end of the Czech Presidency, Sweden took over in January 2023 and, in contrast to its predecessor, turned towards industry by proposing to reduce the scope of the Act with regard to data processed via systems subject to intellectual property rights or to the data holder's specific know-how. It also introduced the possibility for data holders to refuse to share data on the basis of any trade secret, considerably strengthening the position of businesses. As for business-to-government data sharing, the scope of data was restricted to non-personal data only, a request made in the EDPB-EDPS Joint Opinion 02/2022 on the Data Act.

Once the Proposal reached the Parliament for the trilogues, the business-to-government data-sharing provisions took a considerable step back again, following a joint statement by industry organisations expressing the sector's concerns about the protection of trade secrets. Some scenarios for the use of private data by governments in fulfilling tasks in the public interest were removed from Recital 57, although in my opinion this removal does not affect the interpretation of Article 15(b) of the proposal. Most recently, Coreper reached a common position that again highlighted the need to ‘fine-tune’ the terms of business-to-government data-sharing regulation (for more information, see the Council press release here).

Between a rock and a hard place

Following this retrospective analysis, it can be observed that the main objective of the Data Act is to empower consumers by giving them access to data generated by ‘smart devices’, and that the business-to-government data-sharing provisions are not the focus of the Regulation, despite the pro-public sector approach of the Czech Presidency. The European Parliament reinforced the industry's demands by enhancing the protection of trade secrets and restricting the possibilities for governments to access data.

Therefore, one question remains. Given the abovementioned trajectory, what is the future of business-to-government data sharing in the EU?

The Data Act is a horizontal proposal which aims to lay down basic data-sharing rules for all sectors, leaving room for specific regulatory frameworks for particular sectors. A specific regulatory framework for business-to-government data sharing would need to fully address the challenges the public sector faces when accessing privately held data, given its peculiarities and its public interest purpose. Such a regulation would be essential to coordinate how existing regulations (e.g., the General Data Protection Regulation 2016/679, the Data Governance Act Regulation 2022/868 and the Database Directive 96/9/EC), Member States’ legislation (such as the French loi n° 2016-1321 du 7 octobre 2016) and future regulations such as the Data Act will co-exist. A specific regulatory framework would also have to face challenges such as how to involve the private sector in public matters without causing a ‘corporatisation’ of the public sphere, while protecting public values such as the transparency of administration and the interest in accessing certain types of data for public purposes.

So far, I still cannot see the light at the end of the tunnel. Given the backseat position of business-to-government data sharing in the proposal, it is safe to say that the future of B2G data sharing does not depend greatly on the Data Act. At the same time, the regulatory measures introduced by the proposal are too limited to alter the status quo of the business-to-government data-sharing agreements already in place, and they certainly do not address the issues the public sector faces when concluding such agreements, such as power imbalances, unfair contractual clauses and restrictions on the use of data. One can only hope that a Regulation addressing these points is in the Commission’s pipeline.

Barbara Lazarotto is a PhD researcher at the Vrije Universiteit Brussel, a member of the Law, Science, Technology and Society Research Group (LSTS) and a Marie Curie Action Fellow at the LeADS Project.

Her findings point out that neither the DGA nor the EHDS offers a detailed explanation of what these terms could entail. Moreover, all three pieces of legislation will regulate overlapping ground, so their differences may create discrepancies. Her study sought to discuss the similarities and differences between these pieces of legislation in terms of the concepts mentioned and to make sense of their interplay.

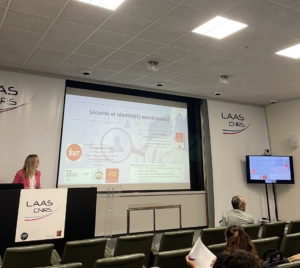

The workshop was divided into three sessions: Session 1 focused on thesis presentations, Session 2 on presentations on cybersecurity, and Session 3 on material, logical and systemic security. Prof. Jessica Eynard, one of the supervisors of the LeADS Project, and Giorgia Macilotti, one of the LeADS collaborators, presented their research in Session 2 on the topic Sécurité(s) et identité(s) numérique(s).

When this technology is used for health data tasks, the practice becomes even more complex, because health data qualifies as sensitive data, which is subject to stricter processing rules.

Also on April 11-13, Aizhan attended in person the 33rd Madrid International Conference on “Law, Education, Marketing and Management” (LEMM-23).