Wrapping Up the LeADS Project: Highlights

After four intensive years of research and collaboration, the LeADS project reaches its conclusion in December 2024. In this final blogpost, we look back at some of the highlights of the project.

LeADS brought together 7 beneficiaries and 7 partners spanning 9 different European countries. The project welcomed 15 Early Stage Researchers (ESRs) from 13 countries across the globe, who worked collectively to advance interdisciplinary research at the intersection of law and technology. Together, they tackled the challenges of the ongoing digital transformation shaping our societies.

Under the leadership of Scuola Superiore Sant’Anna (SSSA) and project coordinator Prof. Giovanni Comandé, LeADS demonstrated the immense potential of interdisciplinary and cross-cultural research. It fostered synergies and showcased the benefits of collaboration among experts from diverse fields and backgrounds.

While this newsletter cannot encompass all the highlights of the past four years, it’s worth noting the many significant achievements, such as the conferences organized by the consortium in Brussels, Crete, Toulouse, Kraków, and Pisa. Other key activities included online webinars and lunch seminars, numerous publications, and the conference presentations delivered by the ESRs.

For those interested in exploring the extensive range of activities conducted during the LeADS project, we encourage you to browse the more than 150 posts on our blog and the over 30 newsletters that have documented the journey.

A major final output of the project is on the horizon. As part of the concluding deliverables, the ESRs have created dissemination pieces that address critical interdisciplinary challenges at the intersection of law and technology. These pieces are tailored for a general, non-specialist audience, translating complex research into accessible narratives. This collection will soon be available in a special issue of the open-access journal Opinio Juris in Comparatione.

As we bid farewell to this transformative project, we extend our heartfelt gratitude to everyone who contributed to its success. From the dedicated beneficiaries and partners to the brilliant ESRs, your efforts have made LeADS a remarkable journey. To our followers and readers, thank you for accompanying us on this incredible adventure.

Conducting Research in the LeADS Crossroads

Since the beginning of the LeADS project, the 15 ESRs have conducted research in four Crossroads, understood as challenges that have to be addressed in data-driven societies: (1) Privacy vs Intellectual Property; (2) Trust in Data Processing & Algorithmic Design; (3) Data Ownership; (4) Empowering Individuals.

Within those four Crossroads the ESRs engaged with each other’s topics to refine their own research. The ESRs had the opportunity to present and discuss the progress of their research on several occasions, for instance during a poster walk session at the 18th International Conference on Artificial Intelligence Applications and Innovations, which took place in June 2022 in Crete, Greece. Furthermore, the ESRs recorded short videos on the content and goals of their individual research, which are available on the LeADS YouTube channel.

In addition to this individual research, the ESRs have also collaborated closely to collectively address the respective challenges of their Crossroads.

The first result of this intensive process was a 180-page introductory report that constituted the first scientific output of the LeADS project and served as a backbone for subsequent research activities.

Subsequently, the ESRs were reshuffled into 6 interdisciplinary groups to draft and publish the following 6 Working Papers:

(i) The Flawed Foundations of Fair Machine Learning

(ii) Contribution to Data Minimization for Personal Data and Trade

(iii) Transparency and Relevancy of Direct-To-Consumer Genetic Testing

(v) From Data Governance by Design to Data Governance as Service

The research conducted by the ESRs while writing the Working Papers (WOPAs) was then used to refine the initial Crossroad Report, which had been drafted in 2022. This second iteration of the Crossroad Report therefore went beyond the state-of-the-art analysis and pushed the ESRs to intensify intra- as well as inter-crossroad collaboration to extend, complement, and refine their analysis. The new report was amended with a particular focus on how their research relates to specific stakeholder needs.

At the same time, each Crossroad recorded a video in which its members reflected on and delved into some of the key questions they had been investigating. The ESRs also discussed how their research had evolved over the course of the LeADS project. The four videos can be watched on the LeADS YouTube channel.

Finally, during a conference in Brussels, the ESRs presented discussion games they had been developing to disseminate their research to the general public. All games are available online: Crossroad 1 ‘Know-IT-All’; Crossroad 2 ‘Jury Trials’; Crossroad 3 ‘SynergyLegal’; Crossroad 4 ‘Privacylandia’.

Two Months of Intensive Interdisciplinary Training

On four occasions the ESRs met to attend a cross-interdisciplinary training program which constitutes a fundamental part of the LeADS project.

Between November and December 2021, the ESRs first met each other in Pisa at Scuola Superiore Sant’Anna for their first three weeks of training, which covered a variety of topics ranging from big data analytics and applications to data mining and machine learning, research ethics, and methodology.

Between March and April 2022, the ESRs met again for two weeks in Pisa. Whereas the first training module mainly involved subjects related to computer and data science, this module focussed more on the legal perspective.

The next training module brought the ESRs to the beautiful island of Crete. In addition to courses on law and data science, the ESRs were divided into interdisciplinary groups for practical sessions to deploy a smart contract and to analyse and identify weaknesses in a security protocol.

Finally, the last module brought the ESRs together in Kraków at Jagiellonian University in September. The last training module concluded with reflections on data and ownership as well as discussions on problems the ESRs have encountered throughout their first year of research.

Throughout all training modules the ESRs benefitted from the combined academic expertise that is available at the 7 beneficiaries of the LeADS project. In addition to courses taught by academics, the ESRs also had the chance to get insights into how problems and current discussions in the data economy are perceived by businesses, thanks to the active participation of the LeADS partners (Innov-Acts, ΒΥΤΕ COMPUTER S.A., Intel, the Italian Data Protection Authority, the Italian Competition Authority, Tellu, INDRA, and MMI).

From Theory to Practice: Secondments

In addition to their individual desk research, the ESRs have had the opportunity to work at beneficiaries and partners of the LeADS project. During their second and third year, our ESRs began travelling to and working at their secondment destinations.

These secondments fulfilled a central role in the LeADS project, as they enabled the ESRs to engage with prominent academics and practitioners and to gain practical experience in how their research might translate into real-life problems encountered by businesses.

Furthermore, they enabled our ESRs to complement their research with practical experiences gained throughout the secondment and adapt their research project accordingly.

Each ESR completed two secondments. In a series of blog posts, the ESRs wrote reflective accounts of their secondment experiences, sharing their insights and perspectives:

(i) Secondments at BY.TE, AGCM, MMI, and University of Luxembourg

(ii) Secondments at UT3, SSSA, and Tellu

(iii) Secondments at AGCM, GPDP, and VUB

(iv) Secondments at CNR, GPDP, University of Luxembourg and Intel

(v) Secondments at GPDP, University of Piraeus, Paul Sabatier III and AGCM

(vi) Secondments at Indra, SSSA, Tellu, and AGCM

Technology Innovation in Law Laboratories (TILL) Workshop

On two occasions the ESRs met for the Technology Innovation in Law Laboratories (TILL) workshop. Partners of the LeADS project provided real-life cases for the ESRs on different topics, such as an investigation by a competition authority against Apple for an alleged abuse of a dominant position in the app market. In small groups, the ESRs had to analyze the legal-technical problem posed by each use case and prepare a presentation, which was then discussed with the industry partners of the LeADS project.

The TILL workshops gave our ESRs the opportunity not only to test their knowledge on real-life cases, but also to further develop and test their problem-solving, collaboration, time-management and presentation skills. A promotional video on both editions has been published on the LeADS YouTube channel.

Final LeADS Event at Scuola Superiore Sant’Anna

The final event of the LeADS project took place at LeADS beneficiary Scuola Superiore Sant’Anna.

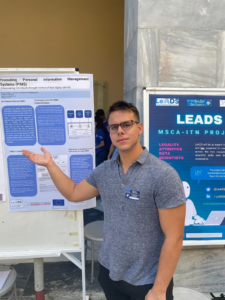

During three days of dynamic activities and intellectual exchange, this culminating event featured a thought-provoking conference on ‘Legally Compliant Data-Driven Society’ and a Poster Walk showcasing the research conducted in the four Crossroads of the LeADS project.

Furthermore, it involved an Innovation Challenge organized in collaboration with the Pisa Internet Festival to address complexities of the AI Act. The challenge brought together participants to develop practical solutions aimed at helping AI developers and deployers navigate the AI Act’s risk classification system and understand the specific requirements that apply to their AI systems.

The competition was structured in two phases. The first phase (conducted remotely) required teams to submit a mock-up demonstrating how their solution could simplify compliance. The second phase took place in person in Pisa, where teams tailored their solutions to a real-world scenario and presented them to a jury.

This scenario featured “SmartByte”, a startup developing an AI-powered algorithm called CyrcAIdian to monitor sleep patterns, which faced critical compliance questions under the new AI Act. Participants had to determine how CyrcAIdian’s classification as a fitness tracker or a medical device would impact its regulatory obligations and commercialization strategy.

Learn more about the winning solution on our blog:

1st place: The Data Jurists

2nd place: the AI-Act Navigators

3rd place: the AI-WARE team

Best Presentation: AI-Renella

During the final LeADS event in Pisa, participants had the opportunity to explore the posters presented by ESRs, which showcased the final results of their work conducted across each Crossroad. We have included the posters in this newsletter so that readers can explore the research findings and insights for themselves.

Barbara Lazarotto – ESR 7: Can Business-To-Government Data Sharing Serve The Public Good?

development, focusing on regulatory frameworks governing this practice under the European Health Data Space. It explores how transparency and the protection of personal data are balanced with the need for innovation in healthcare. By analysing real-world examples and the application of General Data Protection Regulation principles, particularly transparency, this study assesses whether health data can be re-used for AI-driven healthcare advancements without undermining individuals’ data protection rights.

Xengie Doan – ESR 9: Collective Consent, Risks and Benefits of DNA Data Sharing

Christos Magkos – ESR 11: Personal Health Information

Property (and Why You Still End Up Paying With It)

violations of fundamental rights. It critiques the ethical foundation of AI governance, observing that moral objectives are being prioritized over legal obligations, leading to conflicts with the rule of law. The essay calls for a re-evaluation of AI governance strategies, urging a realistic approach that respects citizens, legal precedent, and the nuanced realities of social engineering, aiming to provide an account of some of the dangers in governing artificial intelligence, with an emphasis on Justice.

Even if we may not realize it, AI’s presence in our lives is increasing at a great pace. Most technological services we use nowadays are driven by AI, and that could be good news, since AI aims to improve the quality of those services. Unfortunately, to work well, AI greedily feeds on user data: AI models collect, process, and store a great deal about us, which is a problem if such sensitive information is leaked. This chapter shows that the risk of AI leaking personal data is not merely hypothetical and suggests how to mitigate it.

Digital identity is important for businesses and governments to grow. When apps or websites ask us to create a new digital identity or log in using a big platform, we do not know what happens to our data. That is why experts and governments are working on creating a safe and trustworthy digital identity. This identity would let anyone file taxes, rent a car, or prove their financial income easily and privately. This new digital identity is called Self-Sovereign Identity (SSI). In our work, we propose an SSI-based model to evaluate different identity options, and we then demonstrate our model’s value on the European identity framework.

AI is ubiquitous in the public and private sectors, where it is used to optimize tasks through complex data analysis. While the technology is promising, its use in high-risk domains raises concerns about trust, fairness, and accountability. This chapter analyzes AI-backed automated decision-making systems used by public authorities and advocates for a strict governance framework based on risk management and algorithmic accountability practices, focused on safeguarding fundamental rights and upholding the rule of law by adhering to the principles of natural justice.

Maciej Zuziak – ESR 6: How to Collaboratively Use Statistical Models in a Secure Way

Qifan Yang – ESR 1: Your Data Rights: How does the GDPR affect the Social Media Market?

evaluation. It highlights the increasing importance of data quality in modern, data-driven organizations, especially in light of evolving regulatory frameworks such as the GDPR, Open data EU regulation. The paper begins by addressing inconsistencies

Data portability, often seen as straightforward, is a complex issue in the digital era, intersecting with law, technology, and economics. This contribution uses a BBQ analogy to illustrate the challenges of ensuring data remains functional and meaningful across systems. Examining regulations such as the GDPR, the Digital Markets Act, and the Data Act, it highlights gaps in addressing data semantics and content completeness. The piece advocates for a holistic, integrated approach to enhance data portability, emphasizing the need for a Legality Attentive Data Scientist (LeADS) approach to drive innovation and user empowerment in the digital marketplace.
