ESRs Bárbara and Onntje at Digital Legal Talks 2022

On 24 November 2022, ESRs Bárbara Lazarotto and Onntje Hinrichs participated in the Digital Legal Talks in Utrecht. The event was the third annual conference organised by the Digital Legal Lab, a research network made up of Tilburg University, the University of Amsterdam, Radboud University Nijmegen, and Maastricht University. The conference was dedicated to law and technology and covered a wide variety of topics, such as responsible data sharing, enforcement and the use of technology, and the recently proposed and adopted EU data laws. The keynote speakers were Sandra Wachter from the Oxford Internet Institute and Thomas Streinz from NYU School of Law (for more information, consult the conference programme). Onntje and Bárbara both presented their research on the Data Act Proposal (DA proposal), but each focused on different aspects: whereas Onntje discussed what the DA does or does not do for consumers, Bárbara focused on the Business-to-Government data sharing aspects of the proposal.

In his presentation “The Data Act Proposal: A Missed Opportunity for Consumers”, Onntje set out why the DA might fail to achieve its objective of “empowering individuals with regard to their data” (a more extensive version of his findings has been published in Privacy in Germany (PinG) 06/2022). His presentation therefore focused on the B2C data sharing provisions in Chapter II of the proposal. The DA contains various provisions intended to strengthen consumer protection, such as (i) an obligation to design IoT products so that data are accessible by default; (ii) pre-contractual information obligations on data generated by IoT products; (iii) an obligation for data holders to make data available free of charge upon request by consumers; (iv) a rule that data holders may use non-personal data generated by IoT products only on the basis of a contract; and (v) a right to share data with third parties (including various provisions that protect consumers in their relations with third parties).

Onntje concluded, however, that these provisions might not be sufficient to empower consumers with regard to ‘their’ data, as the proposal claims. Instead, they might strengthen (or at least confirm) the position of data holders as de facto owners of IoT-generated data in B2C relations. First, the access rights are likely to be designed in a very restrictive way: instead of allowing consumers to port data, data holders will likely only be obliged to ‘make data available’. Second, the obligation on data holders to conclude a contract as the basis for using any non-personal data generated by IoT devices would not provide meaningful protection to consumers. Because that contract is not subject to any safeguards, it would not solve problems related to control but merely legitimise them. By confirming data holders’ position as de facto owners of IoT data, the DA would therefore fail to create incentives for data holders to design their products in a more privacy-friendly way. To provide more meaningful protection, the DA could have introduced, for instance, provisions sanctioning unfair contract terms for devices that excessively collect and process data entirely unrelated to the product or the services it provides.

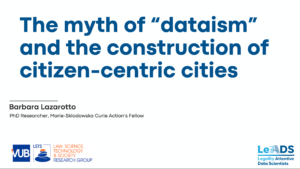

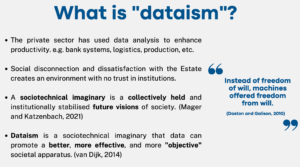

In her presentation “The implications of the Proposed Data Act to B2G data sharing in smart cities”, Bárbara analysed how the business-to-government data sharing provisions of the DA could apply in smart city contexts. Chapter V creates a general obligation to make privately held data available on the basis of “exceptional needs” and specifies the circumstances in which such needs would exist, namely public emergencies and situations in which a lack of data prevents the public sector from fulfilling a specific task in the public interest. In her general analysis of Chapter V, Bárbara argued that recent modifications made by the Czech Presidency gave the Proposal a different dimension, especially with regard to the development of smart cities. The more detailed Recital 58, which now provides guidance on what can be considered lawful tasks in the public interest, opens the path to a discreet development of smart cities. The modifications have also strengthened the links between the Act and other regulations, especially the GDPR, by demanding stricter personal data protection measures from the public sector, a point on which many commentators had criticised the Proposal.

Overall, Bárbara concluded that the recent changes are a good step towards enhancing personal data protection in business-to-government data sharing and towards creating a lawful basis for sharing data in the public interest, which can benefit the development of smart cities. Nevertheless, she highlighted that the Proposal still falls short of having a broad impact in these contexts, since it neither addresses the power imbalance between municipalities and the private sector nor creates measures against the “silo mentality” that pervades data sharing between businesses and governments in smart city contexts.

Bárbara was also invited to participate in a panel on “Transparency Rules for Digital Infrastructures” organised by Max van Drunen and Jef Ausloos. The panel started with a general comparison of how the Data Act and the Digital Services Act approach access to data for research purposes. The discussion then turned to the issues with labelling “vetted researchers” and to what it means to be a researcher. Finally, the panel debated the “dependence on data” for conducting research.

Early Stage Researcher Barbara Lazarotto (ESR 7) presented her research at the

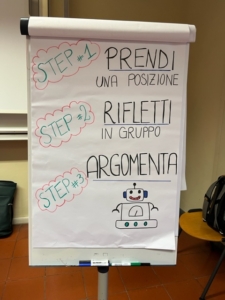

The procedure was designed to achieve goal 2 of the game (to critically evaluate facts and put forward the most convincing arguments) and goal 3 (to learn how democratic debates develop by mimicking the actual rules of parliamentary democracies, albeit in a slightly simplified version).

The procedure was so designed to reach goal 2 of the game, to critically evaluate facts and put forward the most convincing argumentation, and 3, to learn how democratic debates develop by mimicking the actual rules of parliamentary democracies (albeit – in a slightly simplified version).