This blog post was originally published on the Robotics & AI Law Society’s Blog at https://blog.ai-laws.org/going-beyond-the-law-or-back-again/

Abstract:

The relationship between law and ethics in the European Union’s regulatory policy on artificial intelligence remains unclear. In particular, it is ambiguous whether ethics will be employed in a hard or a soft fashion. This ambiguity creates fundamental problems in determining whether a given AI system complies with the proposed Artificial Intelligence Act (AIA), which runs contrary to the AIA’s stated objective of legal certainty. This blog post attempts to uncover that problem, which the academic literature has not yet addressed.

The AIA is a product of the European strategy for regulating AI, which has developed over the past four years and has always embodied a peculiar relationship between law and ethics. In 2018, the European Commission released its Communication outlining the beginnings of a European strategy for AI. Around that time, the High-Level Expert Group on AI (HLEG) was established to advise the Commission, and its 2019 Ethics Guidelines for Trustworthy AI, which took the fundamental rights of the Charter as the subject matter of both its ethical and its legal approach, became central to the EU strategy. Then, in 2020, the official White Paper on Artificial Intelligence was released, stating that the European approach “aims to promote Europe’s innovation capacity in the area of AI while supporting the development and uptake of ethical and trustworthy AI across the EU economy.” Later in 2020, the European Parliament formally requested the Commission to submit a proposal for a regulation specifically addressing ethical principles for the development, deployment, and use of artificial intelligence. Finally, in 2021, the Artificial Intelligence Act was proposed, and the Commission asserted that the Regulation ensures the protection of ethical principles.

The Distinction Between Hard and Soft Ethics

During those years, many AI ethics researchers were concerned with developing principles and struggled to apply them in practice to the problems of AI, while others took the problem of application to be an irredeemable flaw of the principle-based approach and therefore called for a virtue ethics or question-based approach instead. That debate will continue to evolve and is not the direct subject matter of this post, nor are the ongoing debates about how best to operationalize ethics in SMEs or larger corporations. Instead, this post is an effort to uncover the ambiguous relationship between law and ethics in the European Union’s regulatory policy on AI. That ambiguity can be traced back to a misapplication of Luciano Floridi’s concept of “soft ethics,” which forms part of his view that governance should be composed of institutional governance, regulation, and ethics. He and others argued that ethics should play a role in governance because legal requirements are often necessary but not always sufficient to guide society toward a beneficial outcome, and because ethics can act as an anticipatory mechanism to identify and respond to the impacts of emerging technologies. According to his theory, digital ethics is constituted by hard ethics, which changes or creates law by implementing ethical values in legislation or judicial opinions, and by soft ethics, which considers what should be done “over and above the existing regulation, not against it, or despite its scope, or to change it, or to by-pass it.” In other words, while soft and hard ethics cover the same normative ground, the distinction between the two rests on their relationship to law.

Following that distinction, compliance with a given law is equivalent to compliance with the hard ethics that gave it shape, and the analysis used to determine whether some behavior is compliant is therefore the legal method an attorney would use in the relevant jurisdiction. But because soft ethics goes “over and above” the law, a different methodology would presumably be required to determine whether some behavior aligns with a given set of values. Keep this in mind when reading the following paragraphs: the relationship between hard ethics and law reflects how scholars typically understand the cyclical translation of ethical norms into regulation, much as the EU data protection acquis developed from shifts in public perceptions and values of privacy, or as medical professional ethics developed in response to bioethics. The reader may then assume that the same thing is happening in the EU regulatory approach to AI: that ethics is simply prompting regulators to act, and that they are embedding its values into the regulation (hard ethics). However, the policy outlined below suggests that compliance with the AIA may require a separate ethical analysis that takes the law as its starting point and goes beyond it. It is important to note that Floridi’s soft ethics was originally conceived as a “post-compliance” tool (not a legal requirement); yet this post will suggest that, through its adoption in regulatory policy, soft ethics may have (intentionally or unintentionally) taken on a new and confused life as a compliance mechanism.

The Cause of the Ambiguity: A Sketch of the EU Regulatory Development on AI

The consensus in the European Union has largely been that AI should meet both legal and ethical requirements. In its Ethics Guidelines for Trustworthy AI, the HLEG states that AI should be legal, ethical, and technically and socially robust. The HLEG derived its ethical principles from the EU Charter of Fundamental Rights. Distinguishing between the legal and ethical requirements, the HLEG explained that after legal compliance is achieved, “ethical reflection can help us understand how the development, deployment and use of AI systems may implicate fundamental rights and their underlying values and can help provide more fine-grained guidance when seeking to identify what we should do rather than what we (currently) can do with technology.” The HLEG cited Floridi’s soft ethics approach, asserting that “adherence to ethical principles goes beyond [emphasis added] legal compliance.” Yet a striking question remains: in which direction should one go beyond fundamental rights jurisprudence?

The demarcation between legal and ethical requirements carried over into the European Parliament’s Resolution C 404, which distinguished between “legal obligations” and “ethical principles” and advised that high-risk AI systems be subject to mandatory compliance with both. Yet the Parliament also observed that “ethical principles are only efficient where they are also enshrined in law. . .” Note that if ethics is only efficient where it is enshrined in law, and compliance with ethical principles is mandated by law, then the distinction between “legal obligations” and “ethical principles” collapses (hard ethics). However, the Parliament went on to call for common criteria for granting European certificates of ethical compliance, which suggests, again, that an ethical analysis (as opposed to a legal one) may need to be developed to satisfy ethical principles. Finally, the Parliament formally requested the Commission to submit a proposal for a regulation specifically addressing ethical principles for the development, deployment, and use of artificial intelligence.

The proposed AIA responded to that request, and the Commission asserted that the Regulation ensures the protection of ethical principles. The general objective of the legislation was to “ensure the proper functioning of the single market by creating the conditions for the development and use of trustworthy artificial intelligence [emphasis added] in the Union.” The proposed minimum requirements for high-risk AI systems set out in Chapter II are based on the HLEG’s work on Trustworthy AI. At the heart of the AIA, references to ethical principles are non-existent, but references to fundamental rights abound. Remember that the ethical principles of Trustworthy AI are derived from fundamental rights.

Questions Going Forward

Thus, the question remains: does compliance of high-risk AI systems with Chapter II of the AIA require a separate ethical analysis, based on fundamental rights, that goes beyond legal compliance with fundamental rights law? If such compliance is mandatory, does it create a new philosophy for interpreting fundamental rights? And, as asked earlier, in which direction does one go beyond to achieve compliance? Fundamental rights law must often strike a balance between competing rights, and the weighing involved produces the most controversial of legal judgements. Going beyond, especially as an obligation, has far-reaching consequences and would likely be highly politically charged. Policymakers must clarify whether and how to go beyond.

What such an analysis might look like remains unclear. As mentioned at the outset, AI ethics researchers are still debating how best to develop and apply principles, or whether to do so at all, and the field is far from consensus. Underpinning AI ethics, however, is the idea that law can benefit from ethics for various reasons. The opposite might also be true: an ethical analysis or method could be modeled on the legal structure that allows normative propositions to be applied to specific sets of facts (perhaps taking something akin to Hart’s rules of recognition, change, and adjudication as a starting point).

The workshop was divided into three sessions: Session 1 focused on thesis presentations, Session 2 on cybersecurity, and Session 3 on material, logical, and systemic security. Prof. Jessica Eynard, one of the supervisors of the LeADS Project, and Giorgia Macilotti, one of the LeADS collaborators, presented their research in Session 2 on the topic “Sécurité(s) et identité(s) numérique(s)” (Digital Security(ies) and Identity(ies)).

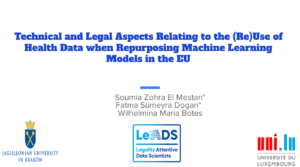

When this technology is used for health data tasks, the practice becomes even more complex, because health data qualifies as sensitive data, which attracts stricter rules regarding its processing.

Also on April 11–13, Aizhan attended the 33rd Madrid International Conference on “Law, Education, Marketing and Management” (LEMM-23) in person.