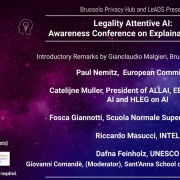

Legality Attentive AI: Awareness Conference on Explainability of AI

28th of January, 2022

Webinar organised by LeADS in collaboration with the Brussels Privacy Hub

Summary of the Conference authored by ESR Robert Poe

For Privacy Day 2022, LeADS (Legality Attentive Data Scientists) and the Brussels Privacy Hub collaborated on the Awareness Conference on the Explainability of AI. Together, the group put on a panel of distinguished speakers: Paul Nemitz of the European Commission; Catelijne Muller, President of ALLAI, EESC, OECD for AI, and HLEG on AI; Dafna Feinholz of UNESCO; Riccardo Masucci of Intel; and Fosca Giannotti of Scuola Normale Superiore and CNR.

Meaningful debate arose from the start, and each speaker spoke seriously and eloquently until the last word.

Dafna Feinholz spoke of both the great benefits and the risks of AI, and of the recent UNESCO Recommendations on the Ethics of AI (Nov. 2021). The Recommendations are admirable, advocating an ethical approach, from design to deployment, that benefits all actors involved in an AI project's lifecycle.

Paul Nemitz marked the recent change of direction by the EU, from a focus on ethics to the establishment of legislation. Paul stressed that, in his opinion, Codes of Conduct (professional ethics) were created to defend companies against regulatory action. Further, he argued that we need binding rules to have fair competition in the EU, and that companies should not be allowed to wash their hands of responsibility for artificial intelligence systems once they have released them in the marketplace.

Catelijne Muller thoughtfully rejected the commonly held belief that regulations stifle innovation, saying, “First of all, they don’t, they promote innovation because they level the playing field.” She added that regulations not only give much-needed legal certainty to corporate actors but also produce standards that will push companies to develop more sustainable and worthwhile AI systems. Catelijne continued by asking the audience to keep in mind the limited capabilities of AI systems today. She ended with a hopeful legal remark on explainability: where a human is already required by law to explain something, the AI is bound as well.

Riccardo Masucci celebrated the consensus the EU has built around the general ethical principles that should guide the development of AI, but lamented that convergence on technical solutions has not yet happened. He added that future investment must go into standardization.

Fosca Giannotti, coming from a technical background, enthusiastically welcomed the responsibility placed on developers of AI, arguing that it brings forth new scientific challenges and that, in the context of explainability, this responsibility is changing AI research by ensuring a focus on the synergistic collaboration between humans and AI systems. However, she expressed the need for appropriate validation processes for such systems, which are difficult to design because they require the evaluation of human-machine interactions (socio-technical interactions).

Afterwards, during the discussion phase, a debate sprang up around a tweet shared in the chat: “…you have to choose between a black box AI surgeon that cannot explain how it works but has a 90% cure rate and a human surgeon with an 80% cure rate that can explain how they work.” Nemitz referred to such hypotheticals as “boogeymen” used to argue against fundamental rights. Meanwhile, Muller firmly confronted a commenter who asked whether a human surgeon could even explain themselves, saying that she would certainly hope so, and that these types of hypotheticals are nonsensical.

Over 70 attendees came to celebrate Privacy Day with an afternoon packed full of thought-provoking interaction. Thank you to everyone involved at LeADS and the Brussels Privacy Hub for hosting such an event.